Brave Software has launched an experimental AI browsing feature in its Nightly testing channel, marking the company's first major step into agentic browsing, where an AI assistant can actively navigate the web on behalf of users.

The feature is powered by Leo, Brave's integrated AI assistant, and comes with strict privacy and security controls aimed at mitigating the significant risks that agentic browsing poses.

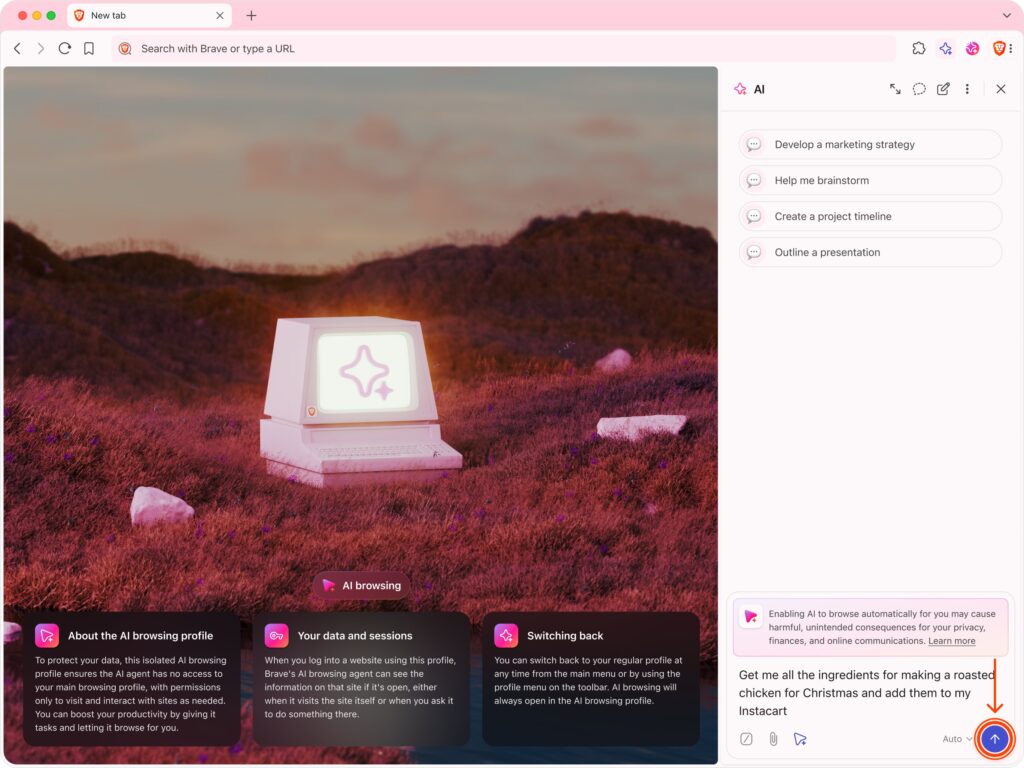

The early release is intentionally limited to Brave Nightly, an opt-in version of the browser intended for developers and testers. The AI browsing experience must be manually enabled via a feature flag and runs in an entirely isolated profile, preventing the AI from accessing the user's regular browsing data, such as cookies, logins, or cached information. This separation ensures that even if the AI behaves unpredictably or is manipulated, the user's sensitive session data remains protected.

Brave Software

Brave Software, known for its privacy-first browser with built-in ad and tracker blocking, is positioning its agentic AI implementation as a safer, more transparent alternative to emerging AI-powered browsers. The company acknowledges, however, that letting an AI control actions on the web introduces new categories of risk, most notably indirect prompt injection attacks and misaligned model behavior, in which the AI misinterprets user instructions and takes unintended actions.

To address these, Brave's AI browsing integrates several layers of defense:

- Model-based safeguards: A secondary AI model, referred to as the “alignment checker,” reviews the primary model's outputs to ensure they match user intent, similar to Google's recent approach. This checker is firewalled from raw website data to reduce its susceptibility to prompt injection attacks.

- System-level restrictions: AI browsing is barred from accessing internal browser pages, non-HTTPS content, and sites flagged as malicious by Safe Browsing.

- User interface cues and controls: The AI browsing mode is visually distinct, and all agent actions occur in visible tabs. Users must manually initiate AI browsing and can inspect, pause, or delete session logs at any time.
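To make the system-level restrictions more concrete, here is a minimal Python sketch of a URL gate that an agent could consult before each navigation. It is not Brave's implementation: the function name `agent_may_visit`, the set of internal schemes, and `FLAGGED_HOSTS` (a hypothetical stand-in for a Safe Browsing lookup) are all assumptions made for illustration.

```python
from urllib.parse import urlsplit

# Hypothetical stand-in for a Safe Browsing reputation lookup; a real
# browser would query a service instead of a hard-coded set.
FLAGGED_HOSTS = {"malware.example", "phishing.example"}

# Assumed list of internal browser schemes the agent must never open.
INTERNAL_SCHEMES = {"brave", "chrome", "about", "file"}


def agent_may_visit(url: str) -> bool:
    """Return True only if the URL passes all three system-level checks."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()

    # 1. Internal browser pages are off-limits.
    if scheme in INTERNAL_SCHEMES:
        return False

    # 2. Anything that is not HTTPS (plain HTTP, data:, etc.) is rejected.
    if scheme != "https":
        return False

    # 3. Malformed URLs without a host, and flagged hosts, are blocked.
    host = (parts.hostname or "").lower()
    if not host or host in FLAGGED_HOSTS:
        return False

    return True


if __name__ == "__main__":
    for candidate in (
        "https://example.com/news",
        "http://example.com/news",
        "brave://settings",
        "https://malware.example/download",
    ):
        print(candidate, agent_may_visit(candidate))
```

Checking the internal schemes before the HTTPS rule is technically redundant, since neither passes the HTTPS test, but keeping it as a separate step mirrors the three distinct restrictions listed above and makes each rejection reason explicit.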

Notably, Brave has opted against implementing per-site permission prompts for now, citing concerns over “warning fatigue,” where users begin to ignore security alerts due to overexposure. Instead, it relies on model-based reasoning to flag and interrupt potentially unsafe actions, prompting users only when necessary.

The feature remains experimental and comes with clear disclaimers: AI browsing is non-deterministic, and no defense mechanism is foolproof. Brave explicitly warns that even with isolated profiles and model oversight, risks like model confusion or subtle prompt injections cannot be fully eliminated.

Brave is encouraging the security community to participate in hardening the feature. Valid vulnerabilities reported during this early test phase are eligible for double rewards via the company's HackerOne bug bounty program.

For now, users interested in testing AI browsing can do so in Brave Nightly by enabling the “Brave's AI browsing” flag at brave://flags. Once enabled, AI browsing becomes accessible through Leo, the browser's assistant, via a button in the chat input box.
