New research introduces a neural network-based method for precisely modeling how cameras blur light, exposing unique, device-specific optical patterns, even between individual units of the same model.

The technique, dubbed the lens blur field, has practical imaging applications but also raises concerns about privacy and device fingerprinting.

The new method tackles a long-standing challenge in computational photography: accurately modeling the spatially varying blur, or point spread function (PSF), caused by real-world lenses. This blur results from defocus, diffraction, and lens aberrations, and it varies both across the image plane and with the focus setting.
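To make "spatially varying" concrete, here is a minimal one-dimensional sketch. It is not the paper's model: the Gaussian PSF shape and the `sigma_at` profile (sharpest at the center, softer toward the edges) are assumptions chosen purely for illustration.

```python
import math

def gaussian_psf(sigma, radius):
    """Return a normalized 1D Gaussian kernel of length 2 * radius + 1."""
    weights = [math.exp(-0.5 * (k / sigma) ** 2) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def sigma_at(x, width):
    """Hypothetical blur profile: sharpest at the center, softer toward the edges."""
    center = (width - 1) / 2
    return 0.5 + 2.0 * abs(x - center) / center

def blur_spatially_varying(signal, radius=6):
    """Blur a 1D signal with a PSF that changes from pixel to pixel.

    Gather form: each output pixel uses the PSF evaluated at its own position.
    """
    n = len(signal)
    out = []
    for x in range(n):
        psf = gaussian_psf(sigma_at(x, n), radius)
        acc = 0.0
        for k, w in enumerate(psf):
            src = min(max(x + k - radius, 0), n - 1)  # clamp at the borders
            acc += w * signal[src]
        out.append(acc)
    return out

# Two identical point sources: one at the center, one near the edge.
signal = [0.0] * 41
signal[20] = 1.0
signal[36] = 1.0
blurred = blur_spatially_varying(signal)
```

In this toy setup the center spike keeps most of its peak height while the identical spike near the edge is smeared over many pixels, which is the kind of position dependence a single global blur kernel cannot describe.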

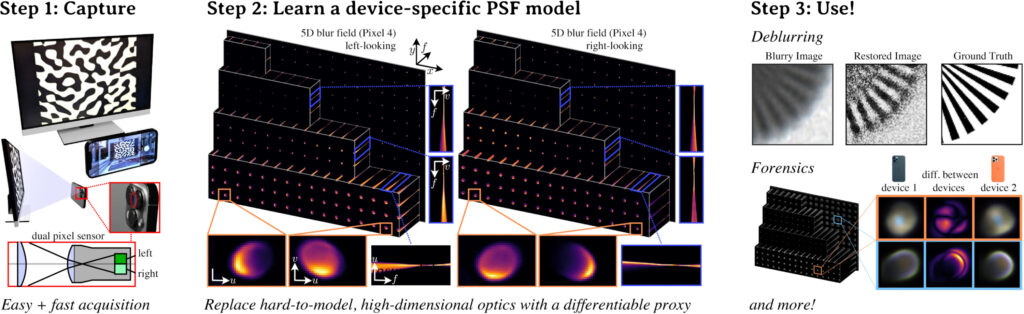

Instead of using traditional parametric or sparse non-parametric blur models, the team proposes a high-dimensional neural representation in the form of a multi-layer perceptron (MLP). The network learns how the PSF changes with image location, focus setting, and, optionally, scene depth. Crucially, it produces a continuous, compact representation of a device’s blur behavior from only a short capture session.
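The idea of a PSF stored in a network can be sketched as a function that takes coordinates and returns a blur value. The example below is a rough illustration, not the authors' architecture: it assumes the five inputs are sensor position `(x, y)`, focus setting, and a 2D offset `(u, v)` within the PSF, and the layer sizes, activations, and helper names (`init_mlp`, `mlp_forward`, `psf_patch`) are all hypothetical. The weights are random, so the output is not a real lens PSF.

```python
import math
import random

def init_mlp(sizes, seed=0):
    """Create randomly initialized (untrained) weights for a small MLP."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        scale = 1.0 / math.sqrt(n_in)
        weights = [[rng.uniform(-scale, scale) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers

def mlp_forward(layers, inputs):
    """Evaluate the MLP on one coordinate tuple and return a scalar."""
    h = list(inputs)
    for i, (weights, biases) in enumerate(layers):
        h = [sum(w * v for w, v in zip(row, h)) + b for row, b in zip(weights, biases)]
        if i < len(layers) - 1:
            h = [max(0.0, v) for v in h]  # ReLU on hidden layers only
    return h[0]

def psf_patch(layers, x, y, focus, radius=3):
    """Query the field on a (2r+1) x (2r+1) grid of offsets; normalize to sum to 1."""
    raw = []
    for v in range(-radius, radius + 1):
        row = []
        for u in range(-radius, radius + 1):
            out = mlp_forward(layers, (x, y, focus, u / radius, v / radius))
            row.append(math.log1p(math.exp(out)))  # softplus keeps values positive
        raw.append(row)
    total = sum(map(sum, raw))
    return [[val / total for val in row] for row in raw]

# Assumed convention: all inputs are normalized, e.g. x and y in [-1, 1].
field = init_mlp([5, 32, 32, 1])
patch = psf_patch(field, x=0.25, y=-0.5, focus=0.8)
```

Because the network is a continuous function of its inputs, the PSF can be queried at any location or focus setting rather than only at measured grid points. A 6D variant would simply add scene depth as a sixth input.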

To calibrate a lens blur field, a user records a few focal stacks (series of images at different focus settings) of known patterns shown on a screen. The MLP is then optimized so that the sharp reference patterns, once blurred by the model, match the captured images. Calibration takes under five minutes of image capture and around 14 hours of GPU compute, and the resulting model is stored in just 20 MB.
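The calibration loop follows an analysis-by-synthesis pattern: blur a known sharp pattern with the current model, compare it with the capture, and adjust the model. The toy below is a deliberately small stand-in. A single Gaussian width replaces the MLP, a grid search replaces gradient-based training, and the "capture" is simulated, so every name and value here is an assumption for illustration only.

```python
import math

def gaussian_kernel(sigma, radius=8):
    """Normalized 1D Gaussian kernel, a toy stand-in for a real PSF."""
    weights = [math.exp(-0.5 * (k / sigma) ** 2) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur(signal, sigma, radius=8):
    """Blur a 1D signal with one global Gaussian, clamping at the borders."""
    kernel = gaussian_kernel(sigma, radius)
    n = len(signal)
    return [
        sum(w * signal[min(max(i + k - radius, 0), n - 1)] for k, w in enumerate(kernel))
        for i in range(n)
    ]

def loss(sigma, sharp, captured):
    """Mean squared error between the simulated blur and the capture."""
    simulated = blur(sharp, sigma)
    return sum((s - c) ** 2 for s, c in zip(simulated, captured)) / len(sharp)

# Known sharp calibration pattern: alternating bars, 8 pixels wide.
sharp = [1.0 if (i // 8) % 2 == 0 else 0.0 for i in range(64)]

# Simulated "capture": the same pattern blurred with a width the fit must recover.
captured = blur(sharp, 2.0)

# Grid search over candidate widths from 0.5 to 4.0 in steps of 0.1.
candidates = [i / 10 for i in range(5, 41)]
estimate = min(candidates, key=lambda s: loss(s, sharp, captured))
```

With a noise-free simulated capture, the search lands on the width that generated it. The real method faces a much harder version of the same problem, with far more parameters and real sensor data, which helps explain the long optimization time.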

The researchers provide a first-of-its-kind dataset of 5D and 6D blur fields for various devices, including iPhones, Google Pixels, and Canon DSLR lenses. Their findings show that blur fields are expressive enough to reveal clear differences in optical behavior not just between models, but between individual units of the same model. For instance, two iPhone 14 Pros exhibit measurable PSF differences, forming what the authors describe as blur signatures.

These signatures are accurate enough to inform depth estimation, improve image restoration, and enable synthetic rendering with device-specific blur. In comparisons, the learned blur fields significantly outperform classic models like Seidel aberration coefficients and prior optimization-based deblurring methods.

Privacy implications

While the paper does not touch on the subject of privacy, its findings have serious implications for user anonymity. The ability to recover a unique blur signature from a camera, even among mass-produced devices, introduces a novel form of hardware fingerprinting.

Unlike software-based identifiers or traditional hardware IDs, lens blur signatures are:

- Persistent: They do not change across app sessions or device resets.

- Unspoofable: They reflect physical variations in the lens-sensor system.

- Recoverable passively: In theory, an app or website capturing a few camera frames of known patterns could extract the signature without user awareness.

This opens the door to cross-site tracking, re-identification from shared images, or even forensic attribution based solely on optical behavior, all without access to metadata or sensor noise profiles. Given that smartphones now include powerful cameras by default, this technique could silently undermine platform-level privacy protections.

For privacy-focused communities, the lens blur field joins other hardware-level identifiers, like microphone response curves or PRNU noise, as potential threats to user anonymity.

The research team has released their dataset and code on this website, enabling further exploration by the broader community.
