AI researcher Yoav Magid unveiled “AppleStorm,” a detailed investigation into Apple Intelligence, highlighting ways Siri’s integration with AI services could unintentionally expose more personal information than users expect.

The research, presented at Black Hat USA 2025, shows that even simple queries can trigger unnecessary data transmissions to Apple’s cloud infrastructure, raising concerns about transparency and privacy safeguards.

Siri’s excessive data transmissions

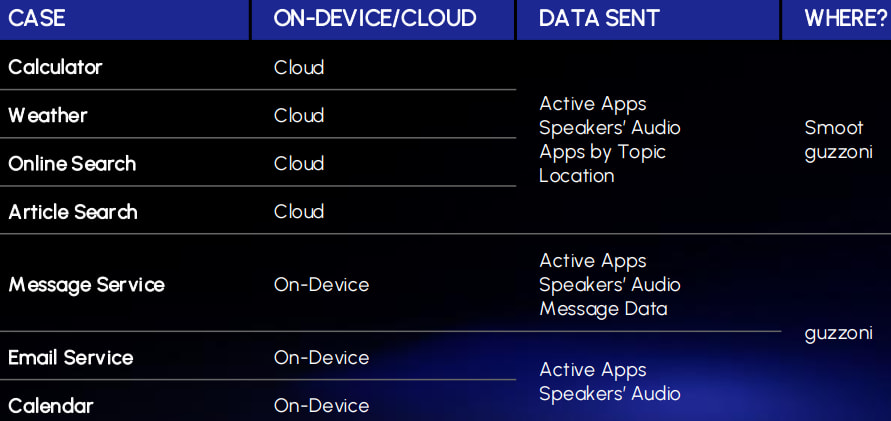

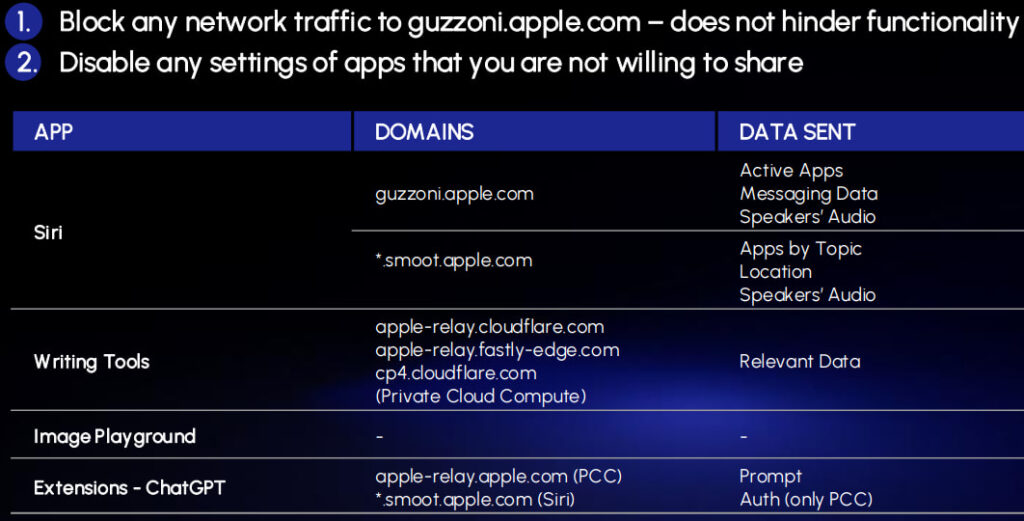

Magid’s analysis dissected the architecture behind Apple Intelligence, which blends on-device models with Apple’s Private Cloud Compute (PCC) for tasks requiring more processing power. While Apple markets PCC as privacy-preserving, Magid demonstrated that interactions, particularly with Siri, can share more data than the user’s explicit request requires. By inspecting network traffic to domains such as guzzoni.apple.com and smoot.apple.com, his team found that queries could prompt Siri to send device metadata, location coordinates, active app lists, and even now-playing audio information to Apple’s servers.
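For readers who want to check their own traffic for these endpoints, the sketch below flags hostnames that match the two domains named above. It is a minimal illustration, not the tooling used in the research: the helper names `is_watched` and `tally_watched` are hypothetical, and the input is assumed to be a plain list of hostnames exported from whatever proxy or DNS log is at hand.

```python
from collections import Counter

# Domains named in the article; everything else here is a hypothetical helper.
WATCHED_DOMAINS = ("guzzoni.apple.com", "smoot.apple.com")


def is_watched(hostname, watched=WATCHED_DOMAINS):
    """Return True if hostname equals a watched domain or is a subdomain of one."""
    host = hostname.strip().lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in watched)


def tally_watched(hostnames, watched=WATCHED_DOMAINS):
    """Count how often each watched hostname appears in an observed list."""
    counts = Counter()
    for name in hostnames:
        if is_watched(name, watched):
            # Normalize case and any trailing dot so duplicates collapse.
            counts[name.strip().lower().rstrip(".")] += 1
    return counts


if __name__ == "__main__":
    # Illustrative input only, not data from the research.
    observed = ["guzzoni.apple.com", "example.com", "Smoot.Apple.com.", "guzzoni.apple.com"]
    print(tally_watched(observed))
```

Matching on a leading dot before the domain keeps lookalike names such as `notsmoot.apple.com` from being counted as subdomains.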

In one example, a weather request to Siri not only fetched the forecast but also checked installed weather apps, reported active virtual machines, and referenced music playback details. Similar patterns were observed in features like Writing Tools, Image Playground, and the ChatGPT integration, though the latter is proxied through Apple’s infrastructure rather than directly communicating with OpenAI.


Apple Intelligence, announced as part of Apple’s broader AI push, powers Siri enhancements, text-generation tools, and creative features like image synthesis. Its design emphasizes running AI locally on-device where possible, but the research reveals how cloud-dependent components can expand the data footprint. The presentation noted that certain requests are duplicated internally between Siri Search and ChatGPT, a process that could compound privacy exposure.

Magid disclosed his findings to Apple in February 2025, supplying logs and screenshots in March. Apple acknowledged the report and responded in July, though details of its mitigation measures remain limited. Magid also offered actionable defenses, including blocking traffic to guzzoni.apple.com, which he says doesn’t break Siri’s functionality, and disabling app settings that send unnecessary data.
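The article does not say how the block should be implemented. One possible approach, assumed here purely for illustration, is a hosts-file entry on a machine or local DNS resolver the user controls. The sketch below only generates the entry text. The function name `hosts_block_lines` and the `0.0.0.0` sink address are assumptions, and whether a hosts-style block takes effect on a given Apple device is not something the source confirms.

```python
def hosts_block_lines(domains, sink="0.0.0.0"):
    """Build hosts-file style lines that point each domain at a sink address.

    Hypothetical helper: the hosts-file approach is an assumption, not a
    method described in the research. Hosts entries match exact names only,
    so subdomains would need their own lines.
    """
    lines = []
    seen = set()
    for domain in domains:
        name = domain.strip().lower().rstrip(".")
        if name and name not in seen:
            seen.add(name)
            lines.append(f"{sink} {name}")
    return lines


if __name__ == "__main__":
    for line in hosts_block_lines(["guzzoni.apple.com"]):
        print(line)
```

A firewall or router rule would serve the same purpose. The hosts format is used here only because it is the simplest to show.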


Magid urged both users and AI vendors to push for clearer privacy policies, implement transparent data handling disclosures, and ensure that certificate pinning doesn’t obstruct legitimate security research. For users concerned about data overreach, network-level monitoring and selective feature disabling remain practical safeguards until Apple delivers more granular privacy controls.
