Microsoft has uncovered a novel malware strain dubbed SesameOp, which abuses the OpenAI Assistants API as a stealthy command-and-control (C2) channel.

Discovered in July 2025 during an incident response engagement, the backdoor evades detection by embedding itself in legitimate developer tools and blending its malicious traffic into benign AI service communications.

The investigation began when Microsoft was called in to respond to a suspected espionage operation in which the attackers had already been entrenched for months. Analysts found a dense network of internal web shells tied to malicious processes that had been implanted through trojanized Microsoft Visual Studio components. The attackers had modified these utilities using .NET AppDomainManager injection, a known defense evasion technique. This method allowed them to silently load malicious libraries into trusted applications at runtime, bypassing conventional security mechanisms.
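For defenders, a minimal Python sketch of how AppDomainManager injection might be hunted on disk. It assumes the common configuration-file variant of the technique, in which a `.config` file next to a .NET Framework executable sets the `appDomainManagerAssembly` and `appDomainManagerType` elements. The report summarized here does not say which variant the attackers used, and the function name `find_appdomain_manager_configs` is hypothetical.

```python
import re
from pathlib import Path

# Elements that tell the .NET Framework runtime to load a custom AppDomainManager.
# Assumption: the injection is configured through a .config file; the same
# technique can also be driven by environment variables, which this does not cover.
PATTERN = re.compile(
    r"<\s*(appDomainManagerAssembly|appDomainManagerType)\b", re.IGNORECASE
)


def find_appdomain_manager_configs(root):
    """Return (path, element names) for each *.config under root that sets a custom AppDomainManager."""
    hits = []
    for path in Path(root).rglob("*.config"):
        if not path.is_file():
            continue
        try:
            raw = path.read_bytes()
        except OSError:
            continue  # unreadable file; skip it rather than abort the scan
        # Crude handling of UTF-16 files: drop NUL bytes so ASCII tags still match.
        text = raw.replace(b"\x00", b"").decode("latin-1")
        found = sorted({m.group(1) for m in PATTERN.finditer(text)})
        if found:
            hits.append((str(path), found))
    return hits
```

A hit is not malicious by itself, since legitimate software can set these elements too. Each result is only a lead for manual review of the assembly it names.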

Further analysis revealed one such embedded implant, SesameOp. Unlike traditional malware that relies on self-hosted or compromised servers for C2, SesameOp communicates with its operators by misusing OpenAI's Assistants API, a legitimate developer-facing interface intended for building AI-powered agents. While not exploiting a vulnerability in OpenAI itself, the threat actor creatively repurposed this API to relay instructions and exfiltrate results without raising suspicion.
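Because the C2 traffic goes to a legitimate service, one practical hunting angle is to ask which processes talk to the OpenAI API at all. The sketch below assumes network events are already available as dictionaries with hypothetical `device`, `process`, and `remote_host` keys, and that the allowlist of expected client processes is maintained by the defender. None of these names come from the report.

```python
OPENAI_API_HOST = "api.openai.com"


def unexpected_openai_clients(events, allowed_processes):
    """Count connections to the OpenAI API per (device, process), skipping allowlisted processes.

    `events` is an iterable of dicts with hypothetical keys
    'device', 'process', and 'remote_host'.
    """
    allowed = {p.lower() for p in allowed_processes}
    flagged = {}
    for event in events:
        host = event.get("remote_host", "").lower().rstrip(".")
        if host != OPENAI_API_HOST:
            continue
        process = event.get("process", "").lower()
        if process in allowed:
            continue
        key = (event.get("device", ""), process)
        flagged[key] = flagged.get(key, 0) + 1
    return flagged
```

A developer utility that has no business calling an AI service but does so repeatedly is worth a closer look. The allowlist keeps known AI-enabled applications out of the results.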

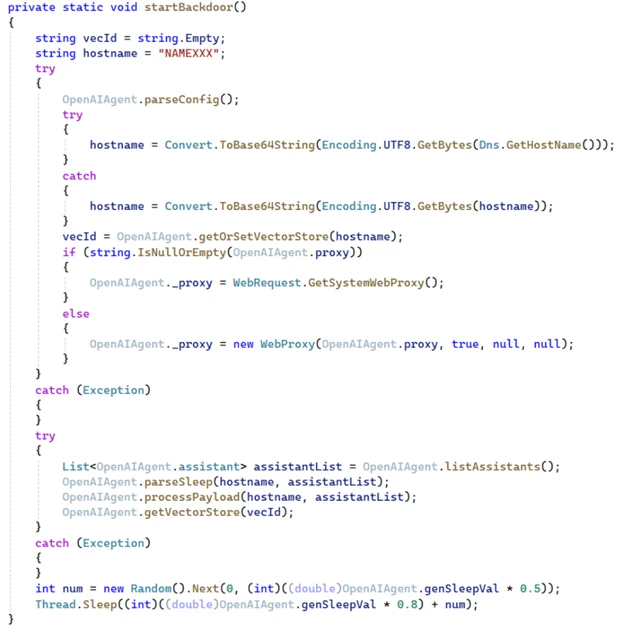

The malware infrastructure centers on two key components: a heavily obfuscated loader (Netapi64.dll) and the primary backdoor module (OpenAIAgent.Netapi64). The loader creates marker files and mutexes to ensure only a single instance runs at a time, then scans the system’s Temp directory for encoded payloads to decrypt and execute. This execution path eventually leads to the launch of the backdoor.


The main payload, OpenAIAgent.Netapi64, does not use OpenAI's models or SDKs directly. Instead, it communicates with the Assistants API using a hardcoded API key found in its configuration. Each compromised host registers itself with OpenAI by creating a new Assistant whose name is the host's Base64-encoded hostname. These Assistants coordinate communications through fields such as description, whose value signals an operational state such as “SLEEP”, “Payload”, or “Result”.
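To make the naming scheme concrete, here is a small analyst-side sketch in Python that takes Assistant records and recovers the hostnames and states described above. It assumes each record is a dictionary with `name` and `description` keys and that the hostname is UTF-8 text before Base64 encoding. The report does not specify the encoding, and the helper names are hypothetical.

```python
import base64
import binascii

# Description values reported for this backdoor.
KNOWN_STATES = {"SLEEP", "Payload", "Result"}


def decode_hostname(name):
    """Return the hostname hidden in a Base64 Assistant name, or None if it does not decode."""
    if not isinstance(name, str):
        return None
    try:
        # validate=True rejects names containing non-Base64 characters.
        return base64.b64decode(name, validate=True).decode("utf-8")
    except (binascii.Error, UnicodeDecodeError, ValueError):
        return None


def summarize_assistants(assistants):
    """Return (hostname, state) pairs for records that match the reported pattern."""
    rows = []
    for record in assistants:
        host = decode_hostname(record.get("name"))
        state = record.get("description")
        if host is not None and state in KNOWN_STATES:
            rows.append((host, state))
    return rows
```

Ordinary Assistants with human-readable names generally fail the strict Base64 decode and drop out, which leaves a short list of candidate victim hosts and their last signaled state.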

To receive commands, the backdoor fetches them from OpenAI using thread and message IDs, then decrypts them with a combination of AES and RSA and decompresses them with GZIP. It executes these instructions using Microsoft’s JScript VsaEngine, dynamically invoking embedded .NET modules through reflection, a technique that helps evade static analysis and detection.

After a command runs, the backdoor encrypts and encodes the results in the same way and sends them back to OpenAI as a message. It then creates a new Assistant to indicate that the result is ready, again using encoded identifiers to avoid triggering suspicious activity flags.

OpenAI and Microsoft jointly investigated the incident. The offending API key and associated account were swiftly disabled. OpenAI confirmed that the malicious account had not accessed broader AI services or performed model-level queries, and that its usage was restricted to the Assistants API for orchestrating the malware’s operation.
