For the first time, Windows users can run a powerful, ChatGPT-like OpenAI model entirely on their own devices, with no internet connection or cloud service required.

OpenAI's new gpt-oss-20B model is now available for local use, and Microsoft has made it easy to run on supported PCs using built-in Windows tools and GPU acceleration.

This marks a major milestone in making cutting-edge AI more accessible and privacy-friendly. Unlike ChatGPT, which operates through OpenAI's servers, gpt-oss-20B is part of a newly released family of open-weight models. These models can be downloaded, inspected, modified, and run locally, giving users unprecedented control over how the AI operates and where their data lives.

gpt-oss-20B and its larger sibling, gpt-oss-120B, are OpenAI's most capable open models to date. Trained using the same techniques as the company's frontier models, including GPT-4o, these new models deliver strong performance across tasks like tool use, coding, reasoning, and even health-related queries.

The 20B model, which is now available on Windows, is optimized for edge devices and can run on modern GPUs with at least 16 GB of VRAM. Microsoft has integrated support for the model into Windows AI Foundry, a native framework within Windows 11 that enables local AI development and execution. With Foundry Local and the AI Toolkit for Visual Studio Code, users can run the model entirely on their PCs without sending data to the cloud.

For end users, this means you can now build or use applications powered by ChatGPT-style AI that runs entirely on your own system. This is ideal for offline environments, security-sensitive workflows, or anyone who wants to avoid relying on external servers.

Local AI with real performance

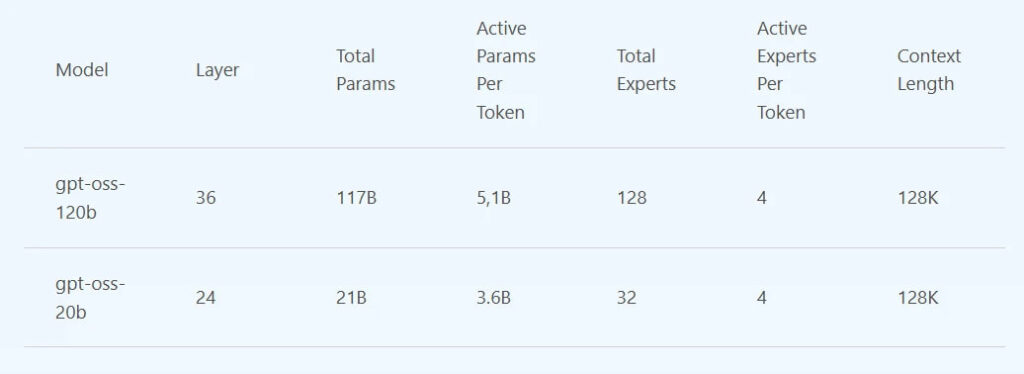

Unlike lightweight or stripped-down models common in open-source projects, gpt-oss-20B is built for real-world use. It uses a mixture-of-experts architecture, activating 3.6 billion parameters per token while maintaining a total parameter count of 21 billion. This design makes the model both powerful and efficient, enabling fast, responsive local inference without massive hardware requirements.
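
To put those parameter counts in perspective, here is a rough back-of-the-envelope sketch in Python. The 21 billion and 3.6 billion figures come from the paragraph above; the bit widths are illustrative assumptions rather than published specifications, and the estimate covers weights only, ignoring activations and context cache.

```python
# Back-of-the-envelope numbers for gpt-oss-20B, using the figures quoted above.
TOTAL_PARAMS = 21e9      # total parameters (from the article)
ACTIVE_PARAMS = 3.6e9    # parameters activated per token (from the article)


def active_fraction(total: float, active: float) -> float:
    """Share of the weights that take part in computing a single token."""
    return active / total


def weight_memory_gb(params: float, bits_per_param: float) -> float:
    """Approximate weight storage in GB (10^9 bytes); weights only."""
    return params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    share = active_fraction(TOTAL_PARAMS, ACTIVE_PARAMS)
    print(f"active share per token: {share:.1%}")
    # Bit widths below are illustrative assumptions, not published specs.
    for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
        size = weight_memory_gb(TOTAL_PARAMS, bits)
        print(f"{label:>6} weights: ~{size:.1f} GB")
```

Under these assumptions, only about a sixth of the weights are used for any given token, and the weights would need to be stored at well under 16 bits each to fit within the 16 GB of VRAM mentioned earlier.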

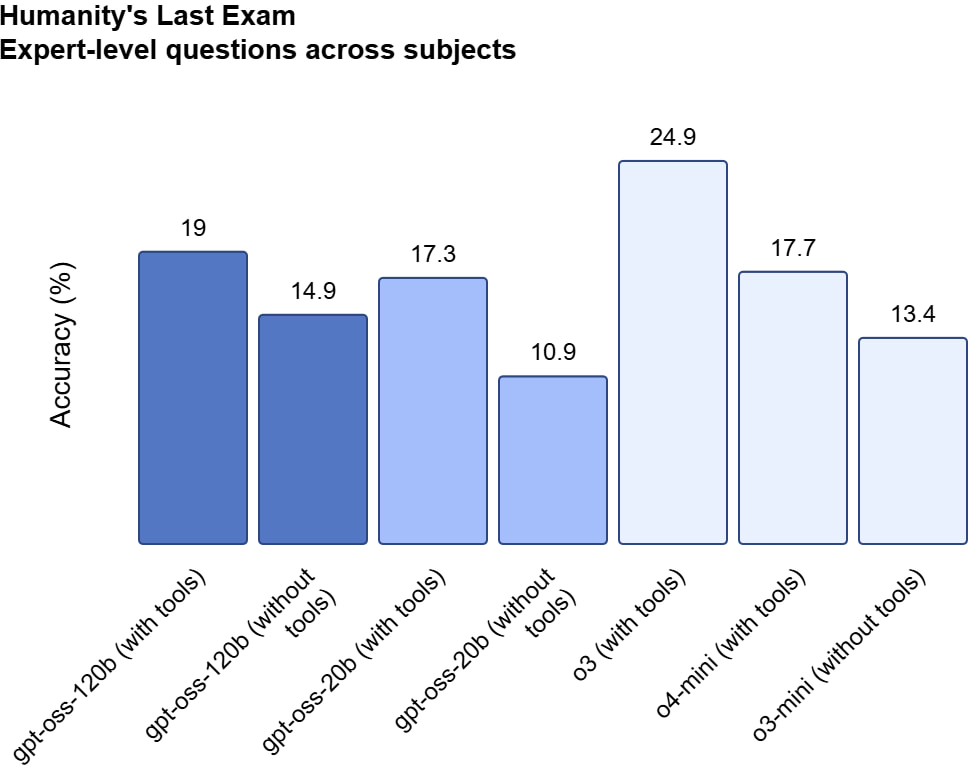

According to OpenAI's evaluations, gpt-oss-20B performs competitively with proprietary models like o3-mini, even outperforming them in areas such as competition math and health-related benchmarks. It also supports agentic behaviors, like executing code or browsing the web (when connected), and is built to follow instructions in natural language with high accuracy.

Unlike ChatGPT, these models aren't wrapped in a polished user interface. Instead, users and developers can embed the model into custom tools, assistants, or offline apps.

The Windows AI Foundry framework, now included in Windows 11, supports deployment of open models like gpt-oss across CPUs, GPUs, and even upcoming NPUs. Foundry Local allows models to be executed securely on-device using standard APIs, with support for quantization, fine-tuning, and model distillation. The integration is powered by Microsoft's ONNX Runtime, a high-performance inference engine that ensures models like gpt-oss-20B can run efficiently on consumer hardware.
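
For developers wondering what talking to a locally hosted model might look like, here is a minimal Python sketch. It assumes the local server accepts OpenAI-style chat completion requests over HTTP; the address `http://localhost:8000/v1` and the model identifier `gpt-oss-20b` are hypothetical placeholders, so substitute whatever your own installation reports. The helper names `build_chat_request` and `ask` are made up for this illustration.

```python
import json
import urllib.request

# Hypothetical placeholders: the real port and model identifier depend on
# your local setup. Replace both with the values your installation reports.
BASE_URL = "http://localhost:8000/v1"
MODEL_ID = "gpt-oss-20b"


def build_chat_request(
    prompt: str, base_url: str = BASE_URL, model: str = MODEL_ID
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt to the local server and return the reply text.

    Assumes the response follows the OpenAI chat completion shape.
    """
    request = build_chat_request(prompt)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Calling `ask("Explain mixture-of-experts in one sentence.")` only works while a compatible local server is running. Because the request goes to `localhost`, the prompt and the reply stay on the machine.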

The release of gpt-oss brings advanced AI back into the hands of individual users and developers. It's a meaningful shift away from the current cloud-dominated model, where powerful language models are gated behind APIs and usage limits.

Although this move opens up many customization options, OpenAI has included safeguards such as safety-focused training and evaluation under its internal Preparedness Framework. Even so, these are raw models without the built-in filters and guardrails of hosted ChatGPT.

If you're on Windows 11 with a modern GPU (16 GB+ VRAM), you can try gpt-oss-20B today using Microsoft's AI Toolkit and Foundry Local tools. The model is free to download under the Apache 2.0 license and can also be explored via platforms like Hugging Face, Ollama, and vLLM. Mac support is coming soon.
