Google's latest quantum chip, Willow, demonstrates groundbreaking advancements in quantum error correction and computational capabilities, showcasing exponential error reduction and performance benchmarks far beyond the reach of classical supercomputers. However, while its achievements are notable, experts caution against overhyping its immediate implications, especially in fields like encryption.

Google Willow

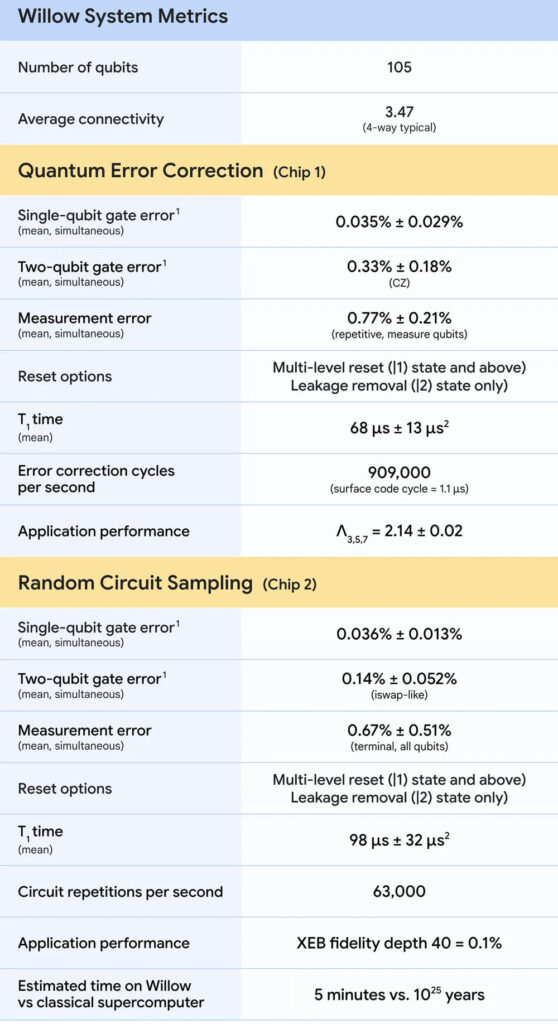

Earlier this week, Google introduced Willow, a 105-qubit quantum processor, fabricated in a state-of-the-art facility in Santa Barbara. The chip achieved exponential error reduction with increasing qubits, a milestone in quantum error correction first conceptualized in 1995. Willow also excelled in the random circuit sampling (RCS) benchmark, completing a computation in under five minutes that would take the fastest classical supercomputers an estimated 10 septillion years, a timeframe that dwarfs the age of the universe.
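To put the "10 septillion years" figure in perspective, here is a quick back-of-the-envelope calculation. It assumes the commonly cited age of the universe of roughly 13.8 billion years, a figure that does not come from Google's announcement.

```python
# Rough scale comparison; the age of the universe is an assumed, commonly cited value.
CLASSICAL_ESTIMATE_YEARS = 10 * 10**24   # 10 septillion years (short scale: 10^25)
AGE_OF_UNIVERSE_YEARS = 13.8e9           # assumption: ~13.8 billion years

# How many times over the universe's lifetime the classical run would take
ratio = CLASSICAL_ESTIMATE_YEARS / AGE_OF_UNIVERSE_YEARS
print(f"Classical estimate is about {ratio:.1e} times the age of the universe")
# prints: Classical estimate is about 7.2e+14 times the age of the universe
```

In other words, the estimated classical runtime is several hundred trillion times the age of the universe.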

Willow's design emphasizes quality over quantity, integrating quantum gates, qubit resets, and readouts with unparalleled precision. These advancements promise a pathway to practical, large-scale quantum computers capable of solving problems inaccessible to classical systems, from quantum chemistry simulations to optimization tasks.

Scientific Breakthrough or PR Strategy?

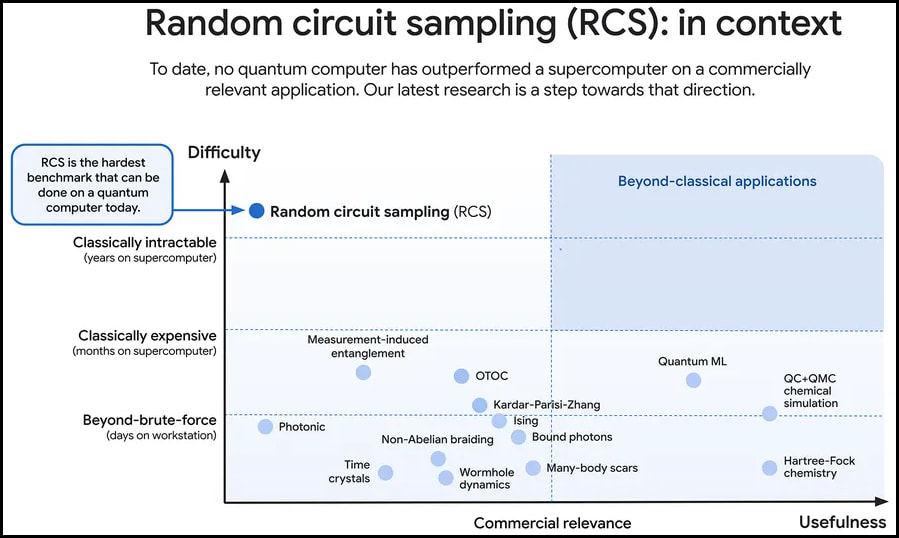

Google's RCS benchmark measures a quantum computer's ability to produce distributions difficult to replicate on classical systems. Willow's results affirm the machine's computational supremacy in executing a task reliant on quantum entanglement and superposition. However, physicist Sabine Hossenfelder notes that RCS, while technically challenging for classical systems, has no practical application. She and other analysts emphasize that RCS success, though significant scientifically, does not imply immediate quantum utility.
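For readers who want a feel for how such a benchmark can be scored, below is a toy sketch. It does not simulate a quantum circuit. Instead it assumes the ideal output probabilities of a deep random circuit follow the Porter-Thomas (exponential) shape, and it scores samples with linear cross-entropy benchmarking (XEB), a metric commonly used for RCS. The function names, qubit count, and sample count are illustrative choices, not Google's actual pipeline.

```python
import random

def porter_thomas_distribution(n_qubits, rng):
    """Toy stand-in for a random circuit's ideal output distribution (assumption)."""
    dim = 2 ** n_qubits
    weights = [rng.expovariate(1.0) for _ in range(dim)]
    total = sum(weights)
    return [w / total for w in weights]

def linear_xeb(probs, samples):
    """Linear XEB score: about 1 for ideal samples, about 0 for uniform noise."""
    dim = len(probs)
    mean_p = sum(probs[s] for s in samples) / len(samples)
    return dim * mean_p - 1.0

rng = random.Random(0)
probs = porter_thomas_distribution(10, rng)   # 10 "qubits" -> 1,024 bitstrings
dim = len(probs)
n_samples = 20000

# A perfect device samples bitstrings according to the ideal distribution.
ideal_samples = rng.choices(range(dim), weights=probs, k=n_samples)
# A fully noisy device outputs uniformly random bitstrings.
noise_samples = [rng.randrange(dim) for _ in range(n_samples)]

xeb_ideal = linear_xeb(probs, ideal_samples)
xeb_noise = linear_xeb(probs, noise_samples)
print(f"ideal device XEB: {xeb_ideal:.2f}, noisy device XEB: {xeb_noise:.2f}")
```

The catch, and the reason the benchmark is hard for classical machines, is that computing the ideal probabilities for a real circuit becomes exponentially expensive as the qubit count grows.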

Critics like Jeffrey Scholz suggest that the RCS benchmark is tailored to favor quantum processors, likening it to simulating quantum phenomena — an inherent advantage for quantum systems. Scholz further cautions that extrapolating Willow's results to more complex computations, such as those needed for cryptographic breakthroughs, involves leaps in scale and accuracy that are far from realized.

Encryption remains safe. For now.

While quantum computers theoretically pose risks to cryptographic systems via algorithms like Shor’s, Willow and other current-generation chips fall far short of the scale such an attack would require. Willow is still an early-stage Noisy Intermediate-Scale Quantum (NISQ) system, grappling with noise and scalability challenges.

IBM, a leader in quantum computing, announced Condor in December 2023. With 1,121 superconducting qubits, it was IBM's first quantum processor to surpass 1,000 qubits. However, it is still a NISQ system, bound by the same practical constraints. IBM's Heron processor, which is optimized for accuracy rather than qubit count, has more recently been paired with the Qiskit software platform to execute circuits with up to 5,000 two-qubit operations, a benchmark in quantum circuit complexity and stability.

Breaking encryption like RSA-2048 with Shor’s algorithm is estimated to require thousands of error-corrected logical qubits, which in turn translates into millions of physical qubits. Willow and IBM's Heron and Condor chips all fall significantly short of that.
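To get a feel for the scale gap, here is a rough sketch of error-correction overhead. It assumes a rotated surface code, which uses 2d^2 - 1 physical qubits per logical qubit at code distance d. The distance and logical-qubit count in the second example are hypothetical placeholders, not figures from Google or IBM.

```python
def physical_qubits_per_logical(distance):
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * distance * distance - 1

def total_physical_qubits(logical_qubits, distance):
    """Physical qubits needed for a given number of logical qubits (routing overhead ignored)."""
    return logical_qubits * physical_qubits_per_logical(distance)

# A single distance-7 logical qubit fits on a chip of Willow's size (105 qubits).
print(physical_qubits_per_logical(7))        # prints: 97

# Hypothetical attack-scale machine: 4,000 logical qubits at distance 27 (assumed values).
print(total_physical_qubits(4000, 27))       # prints: 5828000
```

Even under these simplified assumptions, the jump from roughly one logical qubit to thousands means going from about a hundred physical qubits to several million.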

Going beyond the hype

Despite valid criticisms, Willow represents a pivotal step forward in quantum technology, particularly in error correction. Achieving “below-threshold” error rates, where increasing qubits reduces overall errors, was a theoretical milestone now experimentally validated. Such progress supports the feasibility of building scalable, reliable quantum systems.
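The "below-threshold" idea can be sketched numerically. In a surface code, each increase of the code distance by 2 divides the logical error rate by a suppression factor (often written as Lambda) when the hardware is below threshold, and multiplies it when the hardware is above threshold. The starting error rate and the suppression factors below are hypothetical values chosen for illustration, not Willow's measured numbers.

```python
def logical_error_rate(distance, base_rate, suppression_factor, base_distance=3):
    """Logical error rate per cycle, scaled from a reference code distance.

    Each step of +2 in distance divides the error rate by `suppression_factor`.
    """
    steps = (distance - base_distance) / 2
    return base_rate / suppression_factor ** steps

BASE_RATE = 3e-3  # hypothetical logical error rate at distance 3

for d in (3, 5, 7):
    below = logical_error_rate(d, BASE_RATE, suppression_factor=2.0)  # below threshold
    above = logical_error_rate(d, BASE_RATE, suppression_factor=0.5)  # above threshold
    print(f"d={d}: below-threshold {below:.2e}, above-threshold {above:.2e}")
```

With a suppression factor greater than 1, adding qubits makes the logical qubit exponentially more reliable; with a factor less than 1, adding qubits only makes things worse. That is why crossing the threshold matters.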

However, practical quantum computing remains years — if not decades — away. Bridging the gap between current NISQ systems and fully error-corrected machines requires breakthroughs in qubit coherence, error correction, and quantum algorithms. Collaboration and transparency across academia and industry will be essential for accelerating this progress.

Now would be the time to start thinking about strengthening your encryption. I don't think AES-256 has much longer.