Apple has unveiled its latest initiative to bolster cloud security and privacy, inviting security researchers to scrutinize and verify the integrity of its Private Cloud Compute (PCC) system.

The announcement introduces new resources, including a comprehensive Security Guide, a unique Virtual Research Environment (VRE), and expanded Apple Security Bounty rewards designed to promote independent verification of PCC's security and privacy protections.

This is a significant move to promote transparency, as Apple has now publicly released resources previously available only to select security researchers and auditors. This includes access to the VRE, which replicates the PCC node environment, allowing researchers to evaluate its privacy claims by running inference models and inspecting core software components.

Along with this, Apple has expanded its Security Bounty program to incentivize vulnerability reporting, offering up to $1 million for critical security flaws that compromise PCC's integrity.

Understanding Private Cloud Compute (PCC)

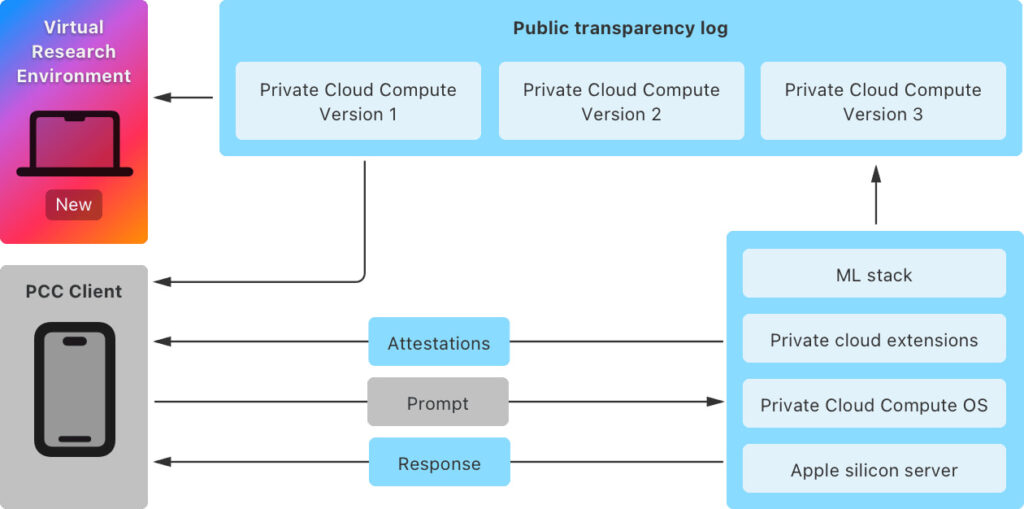

PCC is a critical part of the Apple Intelligence service, handling computationally demanding AI tasks with robust privacy measures. It mirrors Apple's device-level security model so that user data stays highly protected even in the cloud.

Apple's Private Cloud Compute Security Guide provides in-depth technical documentation on how these protections work. This guide breaks down how PCC uses hardware-based attestations to maintain an immutable and verifiable security foundation, preventing targeted attacks and ensuring transparency through consistent logging.

A key focus of the guide is how PCC ensures non-targetability and privacy of user requests. The architecture allows independent researchers to inspect the software running within Apple's data centers, providing an unprecedented level of transparency in AI processing.
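The idea behind this kind of verifiable transparency can be illustrated with a small sketch. It assumes, purely for illustration, that a node's software is summarized by a SHA-256 digest and that a client holds a set of publicly logged digests. The names `measurement`, `may_send_request`, and `published_log` are hypothetical and are not part of Apple's tooling.

```python
from __future__ import annotations

import hashlib


def measurement(image: bytes) -> str:
    """Return a hex SHA-256 digest standing in for a software measurement."""
    return hashlib.sha256(image).hexdigest()


def may_send_request(attested: str, logged: set[str]) -> bool:
    """Allow a request only if the node's attested measurement was published."""
    return attested in logged


# Hypothetical published releases (placeholder bytes, not real PCC images).
published_log = {measurement(b"pcc-release-1"), measurement(b"pcc-release-2")}

trusted_node = measurement(b"pcc-release-2")
modified_node = measurement(b"pcc-release-2 with a backdoor")

print(may_send_request(trusted_node, published_log))   # True
print(may_send_request(modified_node, published_log))  # False
```

In this simplified model, a node running software that was never published fails the check, so a client would decline to send it any data.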

Virtual Research Environment: A first for Apple

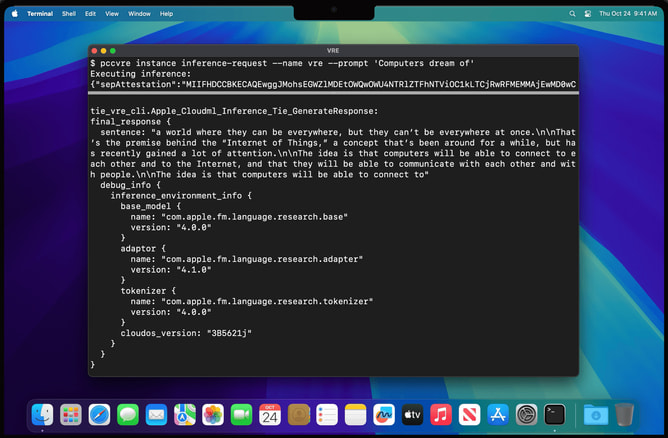

The VRE marks the first time Apple has offered such a tool for its platforms. It enables researchers to inspect PCC's security mechanisms directly from their Mac, replicating the conditions of a PCC node with minimal adjustments for virtualization.

Notably, the VRE includes a virtualized Secure Enclave Processor (SEP), a core component of Apple's security infrastructure, giving researchers a hands-on opportunity to test the robustness of this system. The VRE is available in the macOS Sequoia 15.1 Developer Preview and requires an Apple silicon Mac with at least 16GB of unified memory.

With this tool, researchers can:

- Boot PCC software in a virtual machine

- Verify software integrity via transparency logs

- Inspect and modify PCC software

- Conduct inference against AI models to validate security claims
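To give a sense of what verifying integrity against a transparency log can involve, here is a minimal sketch of a Merkle inclusion proof, a common building block of append-only logs. It is a generic illustration and not Apple's log format or API. The leaf and node prefixes follow the domain-separation convention of RFC 6962, and every function name below is hypothetical.

```python
import hashlib


def leaf_hash(entry: bytes) -> bytes:
    # The 0x00 prefix separates leaf hashes from interior-node hashes.
    return hashlib.sha256(b"\x00" + entry).digest()


def node_hash(left: bytes, right: bytes) -> bytes:
    # The 0x01 prefix marks an interior node.
    return hashlib.sha256(b"\x01" + left + right).digest()


def build_levels(entries):
    """Return all tree levels, leaves first and the root level last.

    Assumes at least one entry.
    """
    level = [leaf_hash(e) for e in entries]
    levels = [level]
    while len(level) > 1:
        nxt = []
        for i in range(0, len(level), 2):
            if i + 1 < len(level):
                nxt.append(node_hash(level[i], level[i + 1]))
            else:
                nxt.append(level[i])  # an unpaired node is promoted unchanged
        level = nxt
        levels.append(level)
    return levels


def inclusion_proof(levels, index):
    """Collect (sibling_hash, sibling_is_left) pairs from leaf to root."""
    proof = []
    for level in levels[:-1]:
        sibling = index ^ 1
        if sibling < len(level):
            proof.append((level[sibling], sibling < index))
        index //= 2
    return proof


def verify_inclusion(entry, proof, root):
    """Recompute the root from an entry and its proof, then compare."""
    current = leaf_hash(entry)
    for sibling, sibling_is_left in proof:
        if sibling_is_left:
            current = node_hash(sibling, current)
        else:
            current = node_hash(current, sibling)
    return current == root


# Placeholder log entries standing in for published software releases.
entries = [b"release-1", b"release-2", b"release-3"]
levels = build_levels(entries)
root = levels[-1][0]
proof = inclusion_proof(levels, 2)

print(verify_inclusion(b"release-3", proof, root))  # True
print(verify_inclusion(b"tampered", proof, root))   # False
```

The verifier needs only the entry, the short proof, and the log's root hash, so it can confirm a release was logged without downloading the whole log.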

Source code and expanded bug bounties

Further emphasizing transparency, Apple has released source code for key PCC components under a limited-use license. These include CloudAttestation, which constructs and validates a PCC node's attestations; Thimble, which includes the privatecloudcomputed daemon that runs on a user's device and uses CloudAttestation to enforce verifiable transparency; splunkloggingd, which filters the logs a PCC node can emit to guard against accidental data disclosure; and srd_tools, the tooling behind the VRE. The code is available on GitHub, allowing researchers to dig deeper into how these elements ensure privacy and security in PCC.

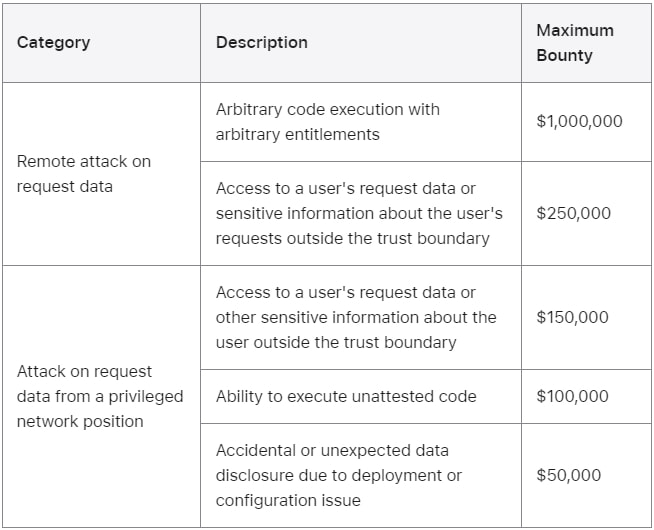

Alongside these resources, Apple has significantly enhanced its Security Bounty program for PCC. New bounty categories align with PCC's top security priorities, such as:

- Remote attacks on request data: Up to $1 million for arbitrary code execution vulnerabilities.

- Data disclosure risks: Up to $250,000 for access to user request data outside the trust boundary.

- Network-based attacks: Up to $150,000 for vulnerabilities that exploit privileged network access.

Other categories, such as the ability to execute unattested code and accidental data disclosure caused by deployment or configuration issues, are also eligible for rewards ranging from $50,000 to $100,000. Apple promises to evaluate every report based on its impact on user privacy and the quality of the exploit demonstration, even if the vulnerability does not fit neatly into a predefined category.

Apple's goal with PCC is to provide industry-leading privacy and security for cloud AI services while maintaining a high level of transparency. By opening its systems to the broader security community, Apple hopes to foster trust and collaboration, ultimately improving the overall security of its AI infrastructure.

Apple encourages interested researchers to begin by exploring the Private Cloud Compute Security Guide and the Virtual Research Environment, available in the latest macOS developer preview, and to submit any vulnerabilities they find through its expanded bounty program.
