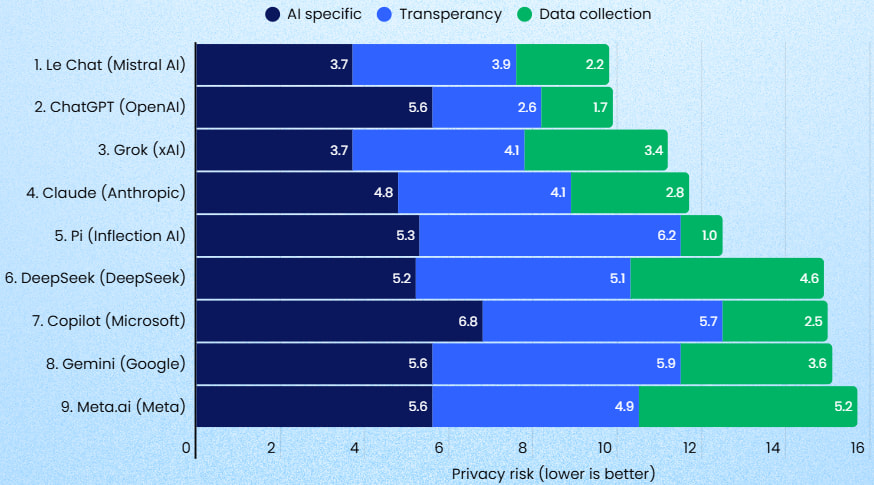

Incogni evaluated the data privacy practices of leading generative AI (Gen AI) platforms, revealing significant disparities in transparency, data collection, and user consent.

French startup Mistral AI’s “Le Chat” model emerged as the most privacy-conscious, while offerings from tech giants Meta, Google, and Microsoft ranked among the most invasive.

The study, conducted between May 25 and 27, involved a rigorous analysis of nine popular LLM platforms across 11 criteria grouped into three categories:

- user data in training

- platform transparency

- data collection/sharing

Researchers applied weighted scoring to emphasize privacy-sensitive issues, such as whether user prompts are used to train models and whether users can opt out.
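To illustrate how weighted scoring of this kind can work, here is a minimal Python sketch. The category names follow the three groups listed above, but the weights, the 0-to-1 penalty scale, and the "Platform A" and "Platform B" figures are all hypothetical; the article does not publish Incogni's actual weights or per-criterion scores.

```python
# Hypothetical category weights; Incogni's real weights are not given in the article.
CATEGORY_WEIGHTS = {
    "user_data_in_training": 0.5,     # weighted most heavily (assumption)
    "platform_transparency": 0.25,
    "data_collection_sharing": 0.25,
}


def weighted_privacy_score(category_scores, weights=CATEGORY_WEIGHTS):
    """Return the weighted average of per-category penalty scores.

    Scores are assumed to run from 0 (most privacy-friendly) to 1 (most invasive).
    """
    missing = set(weights) - set(category_scores)
    if missing:
        raise ValueError(f"missing category scores: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(weights[c] * category_scores[c] for c in weights) / total_weight


# Made-up platforms and scores, purely for illustration.
platforms = {
    "Platform A": {
        "user_data_in_training": 0.25,
        "platform_transparency": 0.5,
        "data_collection_sharing": 0.0,
    },
    "Platform B": {
        "user_data_in_training": 1.0,
        "platform_transparency": 0.5,
        "data_collection_sharing": 0.75,
    },
}

# Lower score means more privacy-friendly, so sort ascending.
ranking = sorted(platforms, key=lambda name: weighted_privacy_score(platforms[name]))
for name in ranking:
    print(name, weighted_privacy_score(platforms[name]))
```

Under these assumed weights, a poor result in the training category pulls a platform's overall score up twice as fast as the same result in either of the other two categories.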

Incogni, a data privacy research and rights management firm owned by cybersecurity company Surfshark, aims to demystify how AI platforms handle user data. The company’s investigation focused on major AI services such as ChatGPT (OpenAI), Claude (Anthropic), Copilot (Microsoft), Gemini (Google), Meta AI (Meta), Grok (xAI), Pi AI (Inflection), DeepSeek, and Le Chat (Mistral AI).

Mistral AI and ChatGPT most privacy-respecting

Mistral AI, a French firm known for developing open-source LLMs, claimed the top spot in Incogni’s overall privacy ranking. Le Chat collects minimal data, avoids invasive sharing practices, and provides users with some control over how their prompts are used. Although it lost points on transparency, particularly in how clearly it explains its training data practices, it made up for this with low data collection and limited third-party data sharing.

ChatGPT, developed by OpenAI, placed second. It stood out for having the clearest privacy policy and FAQ structure, and it explicitly allows users to opt out of prompt-based model training (except when they submit feedback). However, the researchers flagged gaps in how OpenAI discloses details about training data sources and the removal of personal data from datasets.

Incogni

Big Tech platforms raise red flags

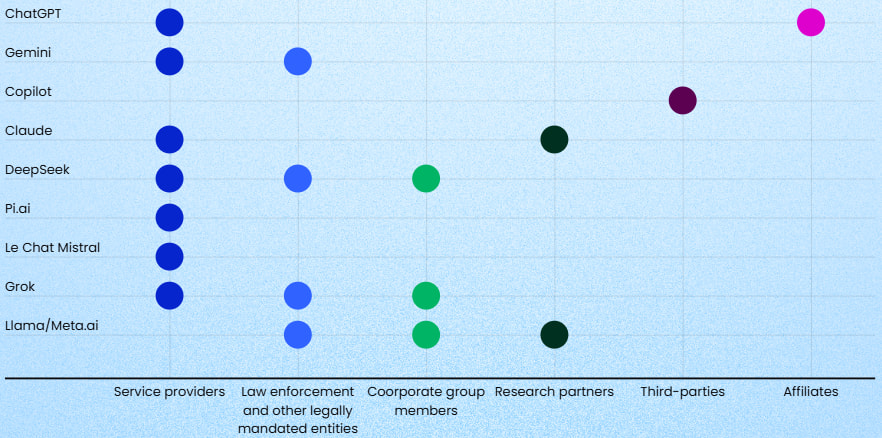

Meta AI was found to be the most privacy-invasive, followed by Google’s Gemini and Microsoft’s Copilot. These platforms were penalized for broad data collection, ambiguous or fragmented privacy policies, and lack of clear opt-out mechanisms for training data use. For example, Gemini and Meta AI do not appear to allow users to prevent their prompts from being used to train future models.

Meta AI, in particular, raised concerns by collecting a wide range of sensitive data such as usernames, addresses, and phone numbers and sharing it within its corporate group and with research collaborators. Microsoft’s Copilot, despite claiming not to collect data on Android, showed contradictory behavior across platforms, leading researchers to assign it the same lower score as its iOS version.


Anthropic claims its Claude models never use user inputs for training, but it lacks clarity on how input data is shared or protected. Meanwhile, Pi AI and DeepSeek had the least informative privacy policies, which were simple but vague, leaving researchers with limited visibility into their practices.

All platforms were found to rely on “publicly accessible sources” for training, a term that could include scraped social media posts or other personal data. Notably, ChatGPT, Gemini, and DeepSeek also draw information from third-party security and marketing partners, while Claude reportedly uses datasets from unnamed commercial vendors.

Many platforms failed to respect common privacy control signals like robots.txt or “do not index” tags, with OpenAI, Google, and Anthropic among those reported to disregard these directives.
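For readers unfamiliar with robots.txt, the sketch below shows how a well-behaved crawler would consult it before fetching a page, using Python's standard `urllib.robotparser` module. The crawler name `ExampleAIBot`, the sample rules, and the `example.com` URL are hypothetical and do not describe any platform named in the study. The file is purely advisory: nothing technically stops a crawler from ignoring it, which is the behavior the report describes.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one AI crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""


def may_crawl(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if the given robots.txt rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines directly, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


url = "https://example.com/posts/1"
print("ExampleAIBot allowed:", may_crawl("ExampleAIBot", url))
print("SomeOtherBot allowed:", may_crawl("SomeOtherBot", url))
```

A compliant crawler would skip any URL for which this check returns `False`; a non-compliant one simply never runs the check.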

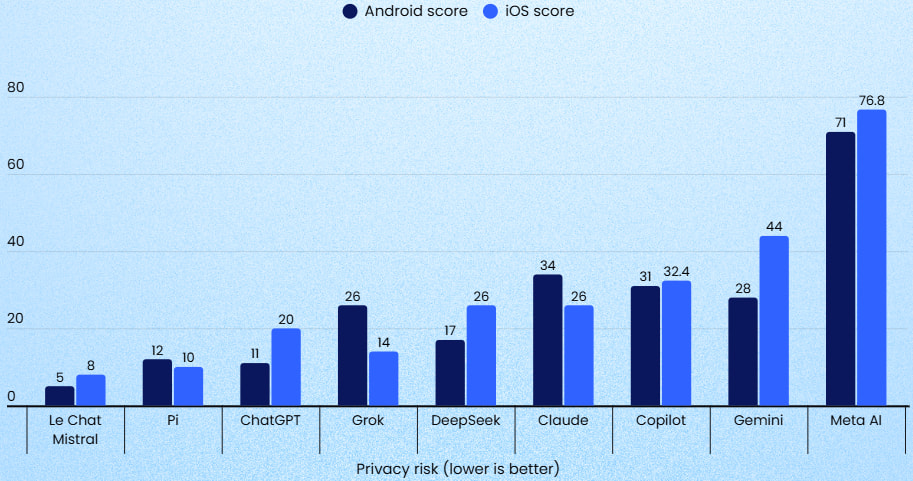

Mobile versions of the AI platforms were also assessed. Le Chat again performed best, with minimal data collection and no significant sharing flagged on either iOS or Android. In contrast, Meta AI’s mobile apps collect and share a wide array of data points, including precise location, phone numbers, and usernames. Gemini and Claude also collect substantial user data, including app interactions and contact details.


Users concerned about privacy are advised to opt out of training data use wherever the option exists and to avoid altogether platforms with unclear privacy policies or opt-out options. In general, using AI platforms through web browsers with strong privacy protections is preferable to using mobile apps.
