A rapidly trending app called Neon, which incentivizes users to record their phone calls and sell the audio to AI companies, has been pulled offline after a severe security vulnerability exposed private call data, transcripts, and phone numbers to any logged-in user.

The discovery was made by TechCrunch, which revealed that Neon's backend servers failed to enforce access controls on sensitive user data.

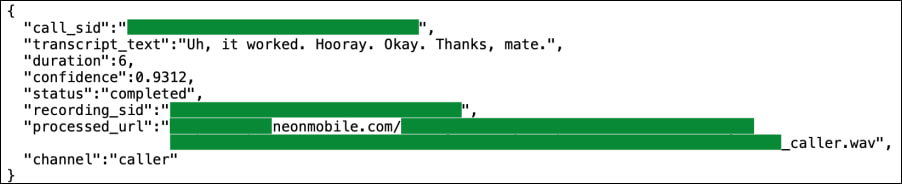

The reporters used the traffic inspection tool Burp Suite to analyze the app's network activity and found that any authenticated user could access other users' audio recordings, transcripts, and call metadata, including phone numbers, timestamps, call durations, and the amount each call earned through the app's incentive program. TechCrunch reporters confirmed the flaw using live test data they generated themselves.

TechCrunch

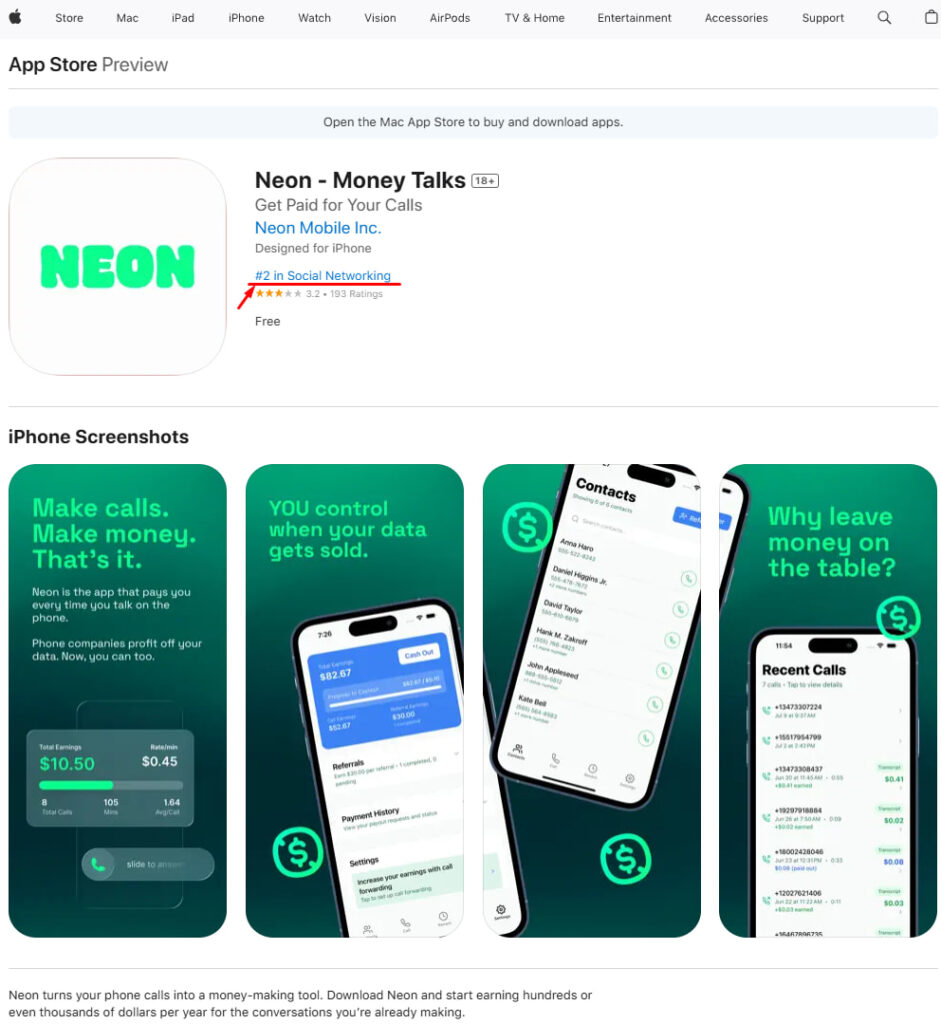

Neon, which launched just a week earlier for the iOS and Android platforms, had already reached the top five free iPhone apps in the US. The app marketed itself as a way for users to monetize their own phone conversations, paying up to 30 cents per minute and as much as $30 per day for call activity, with the resulting data being resold to AI companies for model training and testing.

CyberInsider

The app was created by Alex Kiam, a previously low-profile founder operating the company from a New York apartment, per public business filings. Kiam did not respond to previous inquiries from reporters, but took the app offline shortly after being alerted to the data leak. A follow-up email sent to users acknowledged a temporary suspension of service to “add extra layers of security,” though it notably failed to disclose the actual breach or its extent.

At the core of the vulnerability was Neon's failure to restrict backend access based on user identity. The app's API would respond to requests from any logged-in account by serving not only the requester's own data, but also call transcripts and audio links belonging to other users. The exposed transcripts revealed full-text conversations, and the audio links, while only capturing the voices of Neon users, could be downloaded by anyone with the direct URL. The exposed metadata included both parties' phone numbers, timestamps, and financial details linked to Neon's payment scheme.
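Neon's server code is not public, so the following is only a minimal Python sketch of this class of flaw, assuming a simple record store keyed by call ID. Every name in it (`CallRecord`, `CALLS`, `get_call_unsafe`, `get_call_checked`, `Forbidden`) is hypothetical and chosen for illustration. It contrasts a handler that only requires a logged-in user with one that also checks that the requester owns the record.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CallRecord:
    call_id: str
    owner_id: str
    transcript: str


# Hypothetical in-memory store standing in for a backend database.
CALLS = {
    "c1": CallRecord("c1", "alice", "alice's call"),
    "c2": CallRecord("c2", "bob", "bob's call"),
}


class Forbidden(Exception):
    """Raised when a user requests a record they do not own."""


def get_call_unsafe(requester_id: str, call_id: str) -> CallRecord:
    # The requester is assumed to be logged in, but their identity is
    # never compared with the record's owner, so any user can read
    # any record by supplying its ID.
    return CALLS[call_id]


def get_call_checked(requester_id: str, call_id: str) -> CallRecord:
    # Same lookup, plus an ownership check before returning data.
    record = CALLS[call_id]
    if record.owner_id != requester_id:
        raise Forbidden(f"{requester_id} may not read {call_id}")
    return record
```

In this sketch, `get_call_unsafe("alice", "c2")` returns Bob's record, while `get_call_checked("alice", "c2")` raises `Forbidden`. A real service would enforce the same check on the server for every endpoint that returns user data, including the URLs that serve audio files.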

A deeper concern arises from the possibility that some users were unknowingly recording real-world conversations with non-Neon users, motivated by the app's pay-per-minute structure. This raises serious questions about potential violations of wiretapping laws, especially in jurisdictions that require two-party consent for recordings.

Neon operates under a sweeping set of terms of service that give the company the right to sell and redistribute user data without restriction. According to its privacy policy, Neon claims a global, irrevocable, and sublicensable license to store, modify, reproduce, and distribute users' recordings. The company also asserts that it anonymizes data prior to resale, but it remains unclear how effective those anonymization processes are, particularly given the leaked phone numbers.

Users should stop using the app, change their phone number if possible, and switch SMS-based 2FA to alternative, more secure methods. In general, it's better to avoid apps offering payments in exchange for sensitive data entirely.
